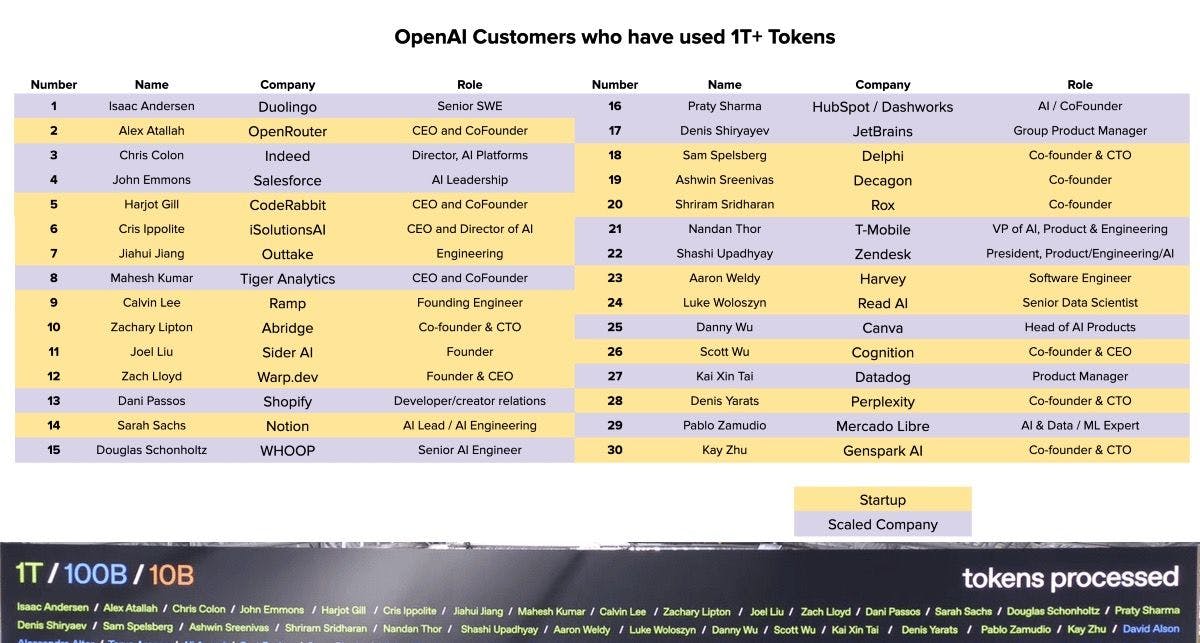

Large language models are now a core part of modern digital products. At the top of this wave are the companies that have each burned through more than one trillion OpenAI tokens. The table, compiled from a recent OpenAI demo day slide that subsequently circulated on X/Twitter, lists 30 customers that collectively consumed an eye-watering number of tokens. Below is a closer look at what each company does, how it deploys generative AI, and why its token usage may be so high. This list is ordered alphabetically by company name, whereas the image above is ordered alphabetically by the company representative’s last name.

Abridge

Abridge is an ambient AI company focused on healthcare documentation. It records doctor-patient conversations and uses natural-language processing and machine-learning models to turn them into structured clinical notes (SOAP notes), along with real-time summaries, action items, and key medical terms. With thousands of clinicians using Abridge to transcribe and summarize long consultations, token counts scale rapidly. Learn more from this overview of Abridge AI.

Canva

Canva is a visual-communication platform with 175 million monthly users. An OpenAI case study describes how Canva’s Magic Studio uses GPT-4 for multimodal content generation, powering features such as Magic Write (text generation), Magic Design (presentations and videos), and Magic Switch (translating and adapting content), among others. With millions of users generating copy, designs, and translations, token counts scale dramatically. Read the Canva case study.

CodeRabbit

CodeRabbit provides automated code review and quality assurance. It uses OpenAI’s models to parse diffs, reason about program structure, and propose fixes; continuous code review across thousands of repositories produces extremely large token counts. Check the CodeRabbit product page for more.

Cognition

Cognition AI is the startup behind Devin, billed as the world’s first AI software engineer. Devin, powered by OpenAI’s GPT-4 foundation model, can autonomously tackle complex engineering tasks such as writing code, debugging, and deploying applications. Running agentic loops to plan, code, and test software requires long context windows and repeated reasoning steps, which drives token usage up. More insights are available in Voiceflow’s analysis.

Datadog

Datadog is an observability platform used by developers and operations teams. At its 2023 Dash conference, the company introduced Bits AI, a digital assistant built on OpenAI’s ChatGPT that surfaces real-time recommendations for issues like alerts and anomalies, and launched a product called LLM Observability to monitor custom LLMs. Because Bits AI is integrated into dashboards monitoring thousands of services and logs, and because LLM Observability analyzes prompts and responses, the token tally is immense. The launch is covered in DevOps.com.

Decagon

Decagon provides fully automated customer support. It uses GPT-3.5, GPT-4, and GPT-4o to power agentic bots that handle millions of customer conversations for companies such as Duolingo, Notion, and Substack. These bots cover the entire support lifecycle, from answering questions to resolving issues and escalating when necessary, which causes token counts to soar. See the Decagon story.

Delphi

Delphi builds personalized “Digital Minds” chatbots for creators and influencers. Its retrieval-augmented generation (RAG) pipeline ingests podcasts, PDFs, and social media posts to ground these chatbots in each creator’s content, and it uses a managed vector database to store more than 100 million embeddings. Building and updating thousands of digital personas across diverse content sources drives high token usage. For more details, read this report on Delphi’s scaling with RAG.
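To make the retrieval step concrete, here is a minimal Python sketch of how a generic RAG pipeline picks the stored chunks closest to a query and folds them into the prompt. It is not Delphi’s implementation: the chunk texts, the three-dimensional “embeddings”, and the helper names (`cosine_similarity`, `retrieve`, `build_prompt`) are hypothetical, and a production system would use a real embedding model and a vector database instead of a Python list.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vec: list[float], store: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the texts of the k stored chunks most similar to the query vector."""
    ranked = sorted(store, key=lambda item: cosine_similarity(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Paste the retrieved chunks into the prompt; every chunk adds input tokens."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical toy "embeddings"; real ones come from an embedding model
# and have hundreds or thousands of dimensions.
store = [
    ("Podcast episode 12: on pricing strategy", [0.9, 0.1, 0.0]),
    ("Blog post: morning routine", [0.0, 0.2, 0.9]),
    ("PDF chapter: negotiating contracts", [0.7, 0.3, 0.1]),
]
query_vec = [0.8, 0.2, 0.0]  # hypothetical embedding of the user's question

chunks = retrieve(query_vec, store, k=2)
prompt = build_prompt("How should I price my course?", chunks)
print(prompt)
```

Every retrieved chunk is pasted into the prompt, so raising `k` or the chunk size directly increases the tokens sent with each question, which is one reason RAG-heavy products accumulate large token counts.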

Duolingo

Duolingo is the world’s largest language-learning app, known for its gamified lessons and broad reach. It partnered with OpenAI to integrate GPT-4 into Duolingo Max, which offers features such as “Explain My Answer” and Role Play, letting learners converse with a virtual barista or tour guide and receive detailed feedback. Because every learner query and model response in these personalized, context-aware exercises is processed as tokens, usage adds up quickly across a large user base, which helps explain how the company passed a trillion tokens. For more context, read this article on Duolingo’s AI features.

Genspark AI

Genspark AI (also known as Sparks) is an AI startup founded by former Baidu executives Eric Jing and Kay Zhu. The company employs a Mixture-of-Agents architecture that orchestrates more than 80 real-world tools to accomplish user tasks; it has raised $160 million and attracted more than two million users. Because a single task can chain many agent and tool calls, each request can consume a large number of tokens. See the Genspark introduction.

Harvey

Harvey builds secure generative-AI tools for law, tax, and finance. Harvey partnered with OpenAI to develop a custom case-law model; the platform can draft documents, answer questions about litigation scenarios, and identify discrepancies in contracts. With big law firms generating and reviewing thousands of long documents, token volumes are enormous. Read the Harvey story.

HubSpot/Dashworks

HubSpot acquired Dashworks, an AI-powered workplace search assistant that connects data across apps, performs deep search, and summarizes important details. According to HubSpot, the Dashworks team will help build AI assistants for every go-to-market worker. Ingesting and reasoning over corporate knowledge bases requires large context windows, and integrating this capability into HubSpot’s Breeze Copilot multiplies token usage across thousands of customers. Further details are in HubSpot’s announcement.

Indeed

Indeed operates the world’s largest job marketplace. Its engineering team has long used machine-learning algorithms to match job seekers with employers, and over the past few years it has added generative AI models to improve these recommendations. Matching millions of resumes to millions of job descriptions requires huge inference volumes. For more context, read Indeed’s CIO interview.

iSolutionsAI

iSolutionsAI builds custom machine-learning models and AI chatbots for businesses. Integrating OpenAI models into each client’s workflow produces many long context windows (customer queries, CRM histories, and so on), which explains the high token volume. More details are available on the iSolutionsAI services page.

JetBrains

JetBrains makes popular developer tools such as IntelliJ IDEA and PyCharm. Its AI Assistant is powered in part by OpenAI’s API, which the company chose for its advanced reasoning and ease of use. The assistant helps millions of developers generate tests, refactor code, write commit messages, and explain errors. Given the size of this user base, even simple code suggestions add up to huge token counts. See JetBrains’ AI Assistant FAQ.

Mercado Libre

Mercado Libre is Latin America’s largest e-commerce and fintech company. The company built Verdi, an AI development platform that leverages GPT-4o and other models to handle complex tasks such as customer service mediation, fraud detection, localization, and review summarization. Verdi already resolves 10% of mediation cases and is on track to mediate $450 million in disputes annually. Learn more from the Verdi overview.

Notion

Notion is a connected workspace for writing, planning, and knowledge management. It transformed its product into a deeply AI-powered platform by integrating OpenAI models to summarize pages, generate writing, and answer questions across the workspace. The combination of a massive user base and generative features like Notion AI leads to an enormous token footprint. See the OpenAI story.

OpenRouter

OpenRouter runs a marketplace-style API that allows developers to access hundreds of large language models through a single endpoint. The platform supports OpenAI, Anthropic, Google, Meta, and Mistral models and automatically handles fallback and cost-efficient routing. Because every request made by thousands of client applications is proxied through OpenRouter, the company’s cumulative token count quickly ballooned. See the OpenRouter overview.
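The fallback and cost-efficient routing described above can be sketched in a few lines of Python. This is a generic illustration, not OpenRouter’s API or routing logic: the `Provider` class, the model names, the per-million-token prices, and the stub `call` functions are all hypothetical stand-ins for real HTTP requests to model providers.

```python
from dataclasses import dataclass
from typing import Callable


class ProviderError(Exception):
    """Raised by a (hypothetical) provider that cannot serve the request."""


@dataclass
class Provider:
    name: str                      # hypothetical model/provider name
    usd_per_million_tokens: float  # hypothetical price, for ordering only
    call: Callable[[str], str]     # sends the prompt and returns the completion


def route(prompt: str, providers: list[Provider]) -> tuple[str, str]:
    """Try providers from cheapest to most expensive; return (provider name, reply)."""
    errors = []
    for prov in sorted(providers, key=lambda p: p.usd_per_million_tokens):
        try:
            return prov.name, prov.call(prompt)
        except ProviderError as exc:
            errors.append(f"{prov.name}: {exc}")  # record the failure, fall back to the next one
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Stub providers standing in for real network calls.
def unavailable(prompt: str) -> str:
    raise ProviderError("rate limited")


def echo(prompt: str) -> str:
    return f"echo: {prompt}"


providers = [
    Provider("model-b", 3.0, echo),
    Provider("model-a", 0.5, unavailable),
    Provider("model-c", 10.0, echo),
]
name, reply = route("Hello", providers)
print(name, reply)
```

Here the cheapest provider (`model-a`) fails, so the request falls back to the next-cheapest one (`model-b`). Because a proxy of this kind sits in front of every request, all of the traffic it forwards counts toward its token total.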

Outtake

Outtake offers AI-driven cybersecurity automation. Its agentic platform automates both the detection and the remediation of attacks, resolving them in hours. Cybersecurity workloads require analyzing extensive logs and telemetry streams, and using OpenAI models to triage and explain alerts and to generate remediation scripts consumes vast numbers of tokens. See the Outtake cybersecurity story.

Perplexity

Perplexity AI is an AI-powered search engine that answers questions with cited sources. The Perplexity Pro subscription includes advanced models from OpenAI, such as GPT-5 and OpenAI’s o-series models, alongside Anthropic and open-source models. When users perform pro searches, the system may call OpenAI models for deep reasoning and web search. For more on the available models, see the Perplexity Pro help article.

Ramp

Ramp is a fintech company that automates corporate spending. Its AI agents automate expense reporting, procurement, and bookkeeping. These agents, built on OpenAI models, must parse and interpret receipts, emails, and invoices at scale; each document contributes tokens, and the aggregated volume from thousands of customers quickly exceeds a trillion. Details are covered in PYMNTS’ report.

Read AI

Read AI creates meeting-productivity tools that automatically summarize conversations, emails, and chats. It joins Zoom and Google Meet calls to produce transcripts, summaries, and coaching feedback, and it condenses long email threads. Real-time transcription and summarization of meetings and message histories for corporate customers lead to huge token consumption. See the SiliconANGLE article.

Rox

Rox is building an AI-driven revenue-operations platform. It unifies fragmented go-to-market data into a single system of record and uses swarms of OpenAI-powered agents to deliver insights and automate workflows for sales teams. Data wrangling and continuous agentic processing across millions of sales interactions lead to heavy token usage. For the full vision, read the OpenAI profile.

Salesforce

Salesforce is a CRM giant that embeds AI deeply in its products. Its Einstein GPT offering draws on OpenAI’s language models alongside Salesforce’s own to generate personalized content (emails, sales actions, code) directly in Salesforce dashboards. The system automates routine tasks such as drafting emails and summarizing records for millions of users, driving extremely high token usage. Learn more from the Salesforce Einstein overview.

Shopify

Shopify powers more than a million online stores. OpenAI partnered with Etsy and Shopify to let users buy items directly through ChatGPT, so Shopify merchants can sell inside the conversation without redirects. Combined with Shopify Magic, the platform’s generative-AI assistant for product descriptions and marketing copy, this helps explain why Shopify’s token usage is so high. Read the Reuters announcement.

Sider AI

Sider is a browser extension that acts as an all-in-one AI assistant. It gives users access to multiple AI models (ChatGPT, Claude, Gemini), summarizes articles and YouTube videos, supports group chats, and provides tools such as Wisebase (knowledge base), ChatPDF, and AI Slides. Supporting such a wide array of functions for millions of users generates huge numbers of tokenized prompts and responses. For a full list of features, see the Sider AI review.

T-Mobile

T-Mobile is leveraging generative AI for customer support. It is building IntentCX, a real-time, intent-driven AI-decisioning platform with OpenAI that will provide next best actions and reduce service calls by up to 75%. Analyzing customer sentiment and guiding both virtual and human agents through tens of millions of interactions generates massive token usage. More information is available in CX Today’s article.

Tiger Analytics

Tiger Analytics is a consulting firm specializing in advanced analytics and AI. In 2024, the company announced a strategic collaboration with AWS to accelerate the development and deployment of generative AI solutions, combining its domain expertise with AWS’s infrastructure. Building custom generative-AI solutions for enterprise clients, including retrieval-augmented generation pipelines and fine-tuned GPT variants, drives significant token usage. Read the CRN India press release.

Warp.dev

Warp.dev builds a modern, AI-powered terminal. Warp integrates AI to suggest commands, generate code, troubleshoot errors, and provide an IDE-like experience. Features like Warp Drive let users save reusable commands and interactive runbooks, while the AI assistant can plan and execute complex workflows. Each AI interaction calls a language model, resulting in high token consumption. See the Warp AI overview.

WHOOP

WHOOP is a wearable fitness company that offers personalized health coaching. The company’s GPT-4-powered coach answers questions about workout routines, recovery, and sleep using data collected by the WHOOP strap, delivering always-on guidance. Because each answer draws on the member’s continuously collected sensor data, prompts can carry substantial context, which pushes token usage up. Read the OpenAI success story.

Zendesk

Zendesk is a customer-experience platform. The company partnered with OpenAI and rolled out GPT-4o to all Zendesk AI customers; according to Zendesk, the model generates replies from knowledge-base content three times faster and with more accurate, human-like answers. By powering help-desk bots, agent assist, and admin tools across thousands of businesses, Zendesk’s token consumption climbs rapidly. See the Zendesk news release.

Is Heavy Token Usage Good or Bad?

This list raises an obvious question: is burning through trillions of tokens a sign of healthy growth or of runaway spending? Technology journalist Lauren Goode quipped, “This is sort of like spending a million bucks gambling and the casino gives you a free hotel room for the night,” a colorful way of noting that burning compute doesn’t guarantee long-term value. Each of these companies has consumed more than a trillion tokens through OpenAI’s APIs, a milestone that may impress peers but could spook investors if the costs outpace revenue.

On the positive side, heavy token usage usually indicates strong product adoption and deep integration of generative AI into workflows, which can justify higher valuations through improved productivity and customer satisfaction. The flip side is that reliance on expensive third-party models could compress margins and expose companies to vendor-pricing risks.

Image credit: Deedy Das. Data compiled from the OpenAI demo day slide listing customers that have consumed more than one trillion tokens.