Authors:

(1) Gonzalo J. Aniano Porcile, LinkedIn;

(2) Jack Gindi, LinkedIn;

(3) Shivansh Mundra, LinkedIn;

(4) James R. Verbus, LinkedIn;

(5) Hany Farid, LinkedIn and University of California, Berkeley.

Abstract

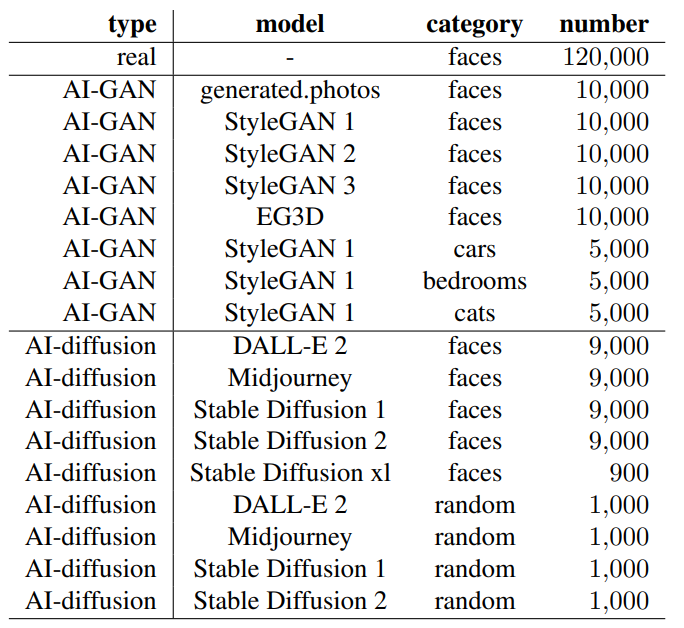

AI-based image generation has continued to improve rapidly, producing increasingly realistic images with fewer obvious visual flaws. AI-generated images are being used to create fake online profiles, which in turn are being used for spam, fraud, and disinformation campaigns. While the general problem of detecting any type of manipulated or synthesized content is receiving increasing attention, here we focus on the narrower task of distinguishing a real face from an AI-generated face. This is particularly applicable when tackling inauthentic online accounts with a fake user profile photo. We show that by focusing only on faces, a more resilient and general-purpose artifact can be detected that allows for the detection of AI-generated faces from a variety of GAN- and diffusion-based synthesis engines, and across image resolutions (as low as 128 × 128 pixels) and qualities.

1. Introduction

The past three decades have seen remarkable advances in the statistical modeling of natural images. The simplest power-spectral model [20] captures the 1/ω frequency magnitude fall-off typical of natural images, Figure 1(a). Because this model does not incorporate any phase information, it is unable to capture detailed structural information. By early 2000, new statistical models were able to capture the natural statistics of both magnitude and (some) phase [25], leading to breakthroughs in modeling basic texture patterns, Figure 1(b).
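As a rough illustration of the power-spectral model described above (a minimal sketch, not the method of [20]), the following Python builds a small image as a sum of cosines whose amplitude falls off as 1/ω and whose phases are random, then checks the fall-off with a direct DFT. The function names, image size, and frequency range are assumptions chosen for illustration.

```python
import cmath
import math
import random


def synthesize_one_over_omega(size=16, max_freq=4, seed=0):
    """Sum cosines with amplitude 1/omega and random phase (illustrative only)."""
    rng = random.Random(seed)
    components = []
    for u in range(0, max_freq + 1):
        for v in range(-max_freq, max_freq + 1):
            if u == 0 and v <= 0:
                continue  # skip the DC term and conjugate-duplicate frequencies
            omega = math.hypot(u, v)  # radial spatial frequency
            phase = rng.uniform(0.0, 2.0 * math.pi)  # the model carries no phase structure
            components.append((u, v, 1.0 / omega, phase))
    image = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            image[y][x] = sum(
                amp * math.cos(2.0 * math.pi * (u * x + v * y) / size + phase)
                for u, v, amp, phase in components
            )
    return image


def dft_magnitude(image, u, v):
    """Magnitude of one normalized 2D DFT coefficient, computed directly."""
    size = len(image)
    total = 0j
    for y in range(size):
        for x in range(size):
            total += image[y][x] * cmath.exp(-2j * math.pi * (u * x + v * y) / size)
    return abs(total) / (size * size)


if __name__ == "__main__":
    img = synthesize_one_over_omega()
    for u, v in [(1, 0), (2, 0), (3, 4)]:
        omega = math.hypot(u, v)
        # A real cosine of amplitude a contributes a/2 at (u, v), so this product stays constant.
        print(u, v, round(dft_magnitude(img, u, v) * omega, 6))
```

Because only the magnitudes are constrained and the phases are drawn at random, the result is a cloud-like texture with no edges or object structure, which is the limitation the text attributes to this class of model.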

While able to capture repeating patterns, these models are not able to capture the geometric properties of objects, faces, or complex scenes. Starting in 2017, and powered by large data sets of natural images, advances in deep learning, and powerful GPU clusters, generative models began to capture detailed properties of human faces and objects [16, 18]. Trained on a large number of images from a single category (faces, cars, cats, etc.), these generative adversarial networks (GANs) capture highly detailed properties of, for example, faces, Figure 1(c), but are constrained to only a single category. Most recently, diffusion-based models [2, 26] have combined generative image models with linguistic prompts allowing for the synthesis of images from descriptive text prompts like “a beekeeper painting a self portrait”, Figure 1(d).

![Figure 1. The evolution of statistical models of natural images: (a) a fractal pattern with a 1/ω power spectrum; (b) a synthesized textile pattern [25]; (c) a GAN-generated face [17]; and (d) a diffusion-generated scene with the prompt “a beekeeper painting a self portrait” [1].](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-hh93uwv.png)

Traditionally, the development of generative image models was driven by two primary goals: (1) understand the fundamental statistical properties of natural images; and (2) use the resulting synthesized images for everything from computer graphics rendering to human psychophysics and data augmentation in classic computer vision tasks. Today, however, generative AI has found more nefarious use cases ranging from spam to fraud and additional fuel for disinformation campaigns.

Detecting manipulated or synthesized images is particularly challenging when working on large-scale networks with hundreds of millions of users. This challenge is made even more significant when the average user struggles to distinguish a real face from a fake one [24]. Because we are concerned with the use of generative AI in creating fake online user accounts, we seek to develop fast and reliable techniques that can distinguish real from AI-generated faces. We next place our work in the context of related techniques.

1.1. Related Work

Because we will focus specifically on AI-generated faces, we will review related work also focused on, or applicable to, distinguishing real from fake faces. There are two broad categories of approaches to detecting AI-generated content [10].

In the first, hypothesis-driven approaches, specific artifacts in AI-generated faces are exploited such as inconsistencies in bilateral facial symmetry in the form of corneal reflections [13] and pupil shape [15], or inconsistencies in head pose and the spatial layout of facial features (eyes, tip of nose, corners of mouth, chin, etc.) [23, 33, 34]. The benefit of these approaches is that they learn explicit, semantic-level anomalies. The drawback is that over time synthesis engines appear to be, either implicitly or explicitly, correcting for these artifacts. Other non-face-specific artifacts include spatial frequency or noise anomalies [5, 8, 12, 21, 35], but these artifacts tend to be vulnerable to simple laundering attacks (e.g., transcoding, additive noise, image resizing).
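To make the laundering operations mentioned above concrete, here is a minimal sketch of two of them, additive noise and resizing, applied to a grayscale image stored as a list of rows. The function names and parameter values are hypothetical and are not taken from the cited work; transcoding is omitted because it would require an image codec library.

```python
import random


def add_gaussian_noise(image, sigma=5.0, seed=0):
    """Add zero-mean Gaussian noise to each pixel and clip to [0, 255]."""
    rng = random.Random(seed)
    return [
        [min(255.0, max(0.0, pixel + rng.gauss(0.0, sigma))) for pixel in row]
        for row in image
    ]


def downsample_2x(image):
    """Halve the resolution by averaging each 2x2 block (a trailing odd row or column is dropped)."""
    height, width = len(image), len(image[0])
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) / 4.0
            for x in range(0, width - 1, 2)
        ]
        for y in range(0, height - 1, 2)
    ]


if __name__ == "__main__":
    # A tiny 4x4 checkerboard stands in for an image with strong high-frequency content.
    board = [[255.0 if (x + y) % 2 == 0 else 0.0 for x in range(4)] for y in range(4)]
    laundered = downsample_2x(add_gaussian_noise(board, sigma=2.0))
    print(laundered)
```

Both operations leave the semantic content of an image largely intact while altering pixel-level noise and high-frequency detail, which is why detectors that depend on such low-level cues can be sensitive to them.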

In the second, data-driven approaches, machine learning is used to learn how to distinguish between real and AI-generated images [11, 29, 32]. These models often perform well when analyzing images consistent with their training, but then struggle with out-of-domain images and/or are vulnerable to laundering attacks because the model latches onto low-level artifacts [9].

We attempt to leverage the best of both of these approaches. By training our model on a range of synthesis engines (GAN and diffusion), we seek to avoid latching onto a specific low-level artifact that does not generalize or that may be vulnerable to simple laundering attacks. By focusing only on detecting AI-generated faces (and not arbitrary synthetic images), we show that our model seems to have captured a semantic-level artifact distinct to AI-generated faces, which is highly desirable for our specific application of finding potentially fraudulent user accounts. We also show that our model can detect AI-generated faces not previously seen in training, and is resilient across a large range of image resolutions and qualities.

This paper is available on arXiv under a CC 4.0 license.