Authors:

(1) Gonzalo J. Aniano Porcile, LinkedIn;

(2) Jack Gindi, LinkedIn;

(3) Shivansh Mundra, LinkedIn;

(4) James R. Verbus, LinkedIn;

(5) Hany Farid, LinkedIn and University of California, Berkeley.

Table of Links

2. Data Sets

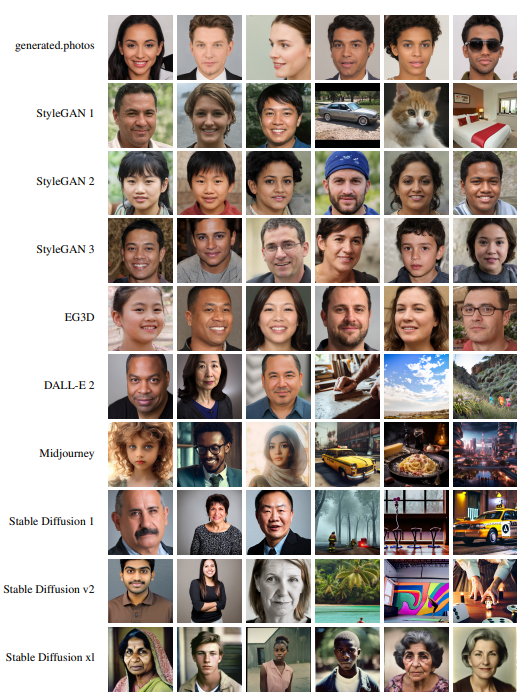

Our training and evaluation leverage 18 data sets consisting of 120,000 real LinkedIn profile photos and 105,900 AI-generated faces spanning five different GAN and five different diffusion synthesis engines. The AI-generated images consist of two main categories, those with a face and those without. Real and synthesized color (RGB) images are resized from their original resolution to 512 × 512 pixels. Shown in Table 1 is an accounting of these images, and shown in Figure 2 are representative examples from each of the AI-generated categories as described next.

2.1. Real Faces

The 120,000 real photos were sampled from LinkedIn users with publicly-accessible profile photos uploaded between January 1, 2019 and December 1, 2022. These accounts showed activity on the platform on at least 30 days (e.g., signed in, posted, messaged, searched) without triggering any fake-account detectors. Given the age and activity on the accounts, we can be confident that these photos are real. These images were of widely varying resolution and quality. Although most of these images are standard profile photos consisting of a single person, some do not contain a face. In contrast, all of the AI-generated images (described next) consist of a face. We will revisit this difference between real and fake images in Section 4.

2.2. GAN Faces

2, and 3, color images were synthesized at a resolution of 1024×1024 pixels and with ψ = 0.5. [1] For EG3D (Efficient Geometry-aware 3D Generative Adversarial Networks), the so-called 3D version of StyleGAN, we synthesized 10,000 images at a resolution of 512×512, with ψ = 0.5, and with random head poses.

A total of 10,000 images at a resolution of 1024 × 1024 pixels were downloaded from generated.photos[2]. These GAN-synthesized images generally produce more professional looking head shots because the network is trained on a dataset of high-quality images recorded in a photographic studio.

2.3. GAN Non-Faces

A total of 5,000 StyleGAN 1 images were downloaded[3] for each of three non-face categories: bedrooms, cars, and cats (the repositories for other StyleGAN versions do not provide images for categories other than faces). These images ranged in size from 512 × 384 (cars) to 256 × 256 (bedrooms and cats).

2.4. Diffusion Faces

We generated 9,000 images from each Stable Diffusion [26] version (1, 2)[4]. Unlike the GAN faces described above, text-to-image diffusion synthesis affords more control over the appearance of the faces. To ensure diversity, 300 faces for each of 30 demographics with the prompts “a photo of a {young, middle-aged, older} {black, east-asian, hispanic, south-asian, white} {woman, man}.” These images were synthesized at a resolution of 512 × 512. This dataset was curated to remove obvious synthesis failures in which, for example, the face was not visible.

An additional 900 images were synthesized from the most recent version of Stable Diffusion (xl). Using the same demographic categories as before, 30 images were generated for each of 30 categories, each at a resolution of 768 × 768.

We generated 9,000 images from DALL-E 2 [5], consisting of 300 images for each of 30 demographic groups. These images were synthesized at a resolution of 512×512 pixels.

A total of 1,000 Midjourney[6] images were downloaded at a resolution of 512 × 512. This images were manually curated to consist of only a single face.

2.5. Diffusion Non-Faces

We synthesized 1,000 non-face images from each of two versions of Stable Diffusion (1, 2). These images were generated using random captions (generated by ChatGPT) and were manually reviewed to remove any images containing a person or face. These images were synthesized at a resolution of 600 × 600 pixels. A similar set of 1,000 DALL-E 2 and 1,000 Midjourney images were synthesized at a resolution of 512 × 512.

2.6. Training and Evaluation Data

The above enumerated sets of images are split into training and evaluation as follows. Our model (described in Section 3) is trained on a random subset of 30,000 real faces and 30,000 AI-generated faces. The AI-generated faces are comprised of a random subset of 5,250 StyleGAN 1, 5,250 StyleGAN 2, 4,500 StyleGAN 3, 3,750 Stable Diffusion 1, 3,750 Stable Diffusion 2, and 7,500 DALL-E 2 images.

We evaluate our model against the following:

• A set of 5,000 face images from the same synthesis engines used in training (StyleGAN 1, StyleGAN 2, StyleGAN 3, Stable Diffusion 1, Stable Diffusion 2, and DALL-E 2).

• A set of 5,000 face images from synthesis engines not used in training (Generated.photos, EG3D, Stable Diffusion xl, and Midjourney).

• A set of 3,750 non-face images from each of five synthesis engines (StyleGAN 1, DALL-E 2, Stable Diffusion 1, Stable Diffusion 2, and Midjourney).

• A set of 13,750 real faces.

This paper is available on arxiv under CC 4.0 license.

[1] The StyleGAN parameter ψ (typically in the range [0, 1]) controls the truncation of the seed values in the latent space representation used to generate an image. Smaller values of ψ provide better image quality but reduce facial variety. A mid-range value of ψ = 0.5 produces relatively artifact-free faces, while allowing for variation in the gender, age, and ethnicity in the synthesized face.

[2] https://generated.photos/faces

[3] https://github.com/NVlabs/stylegan)

[4] https : / / github . com / Stability - AI / StableDiffusion

[5] https://openai.com/dall-e-2

[6] https://www.midjourney.com